Week 1 – My Mind

Writing

My thoughts, by default, stream in without my control, continuously circulating, wandering, re-remembering, forgetting. I constantly surprise myself when speaking in class: I start with only a vague intuition of a point to make, and once I start speaking, something comes out decently formed. But if I don’t write down the thrust of a thought as it comes to me, the class discussion sometimes causes it to fade out. Ideas are fragile: “At the start of the process the idea is just a thought – very fragile and exclusive,” former Apple CDO Jony Ive said of the design process.

I try to engage, when the stakes of a situation are higher than the average moment, in “structured thinking”: the equivalent of trying to impose a 2D Cartesian grid on 4D flowing cloth, setting up guardrails for the cloth to run into and settle around, to produce a more useful, productivity-oriented outcome. This can take the form of pros/cons lists, mapping outcomes in tables, or making a quick chart of the variance of outcomes over time. This is the optimistic, intentional version of my thinking, which I remember disproportionately, like the small percentage of meals that are salads. These moments represent the best of my logical decision-making, as opposed to the thousands of go-with-my-gut decisions I make the rest of the day.

I self-vocalize as I read, and naturally hear my own voice as I speak. My thoughts always have my voice attached to them. But my voice is not a neutral voice: it’s one I have a great number of feelings & judgements about. To outsiders, it signals a gender, a place of origin, the level of education & socio-economic status I grew up with. To me, it carries my judgements of what is worth(y of) my saying, and whether I should say it. I am my own worst critic, by at least an order of magnitude, and as ideas begin forming, I’m judging them before they get the slightest chance to hatch. A huge percentage of my ideas never hatch even to the first stage, and the world & I never get to see in retrospect if they were worth anything, because I shut them down before they happened.

When I’m doing something mundane or repetitive (taking a shower, vacuuming my apartment, mowing grass), I tend to have one thought at the start, then my brain loops it repeatedly throughout the experience. I’ve never discovered why, or found a way to think about something more useful without external stimuli intervening.

Subsequent thoughts are spatially connected—“adjacency” in a vague, deeply human way. This person with this attribute who makes me think of this other person then their company and what happened with them on the internet so something else that came up during the same session on Twitter so the visual design of their website so where else did I see that pattern so what if I used that technique in my project so I should call someone…it’s inexplicable. Someday, we will make a computer that can model these connections naturally, but until then, we’re stuck with “double-sided backlinks” & re-reading the original Memex essay & a prayer that maybe spatial computing will solve this, or some more ethical version of Neuralink. (Not holding my breath.)

Making

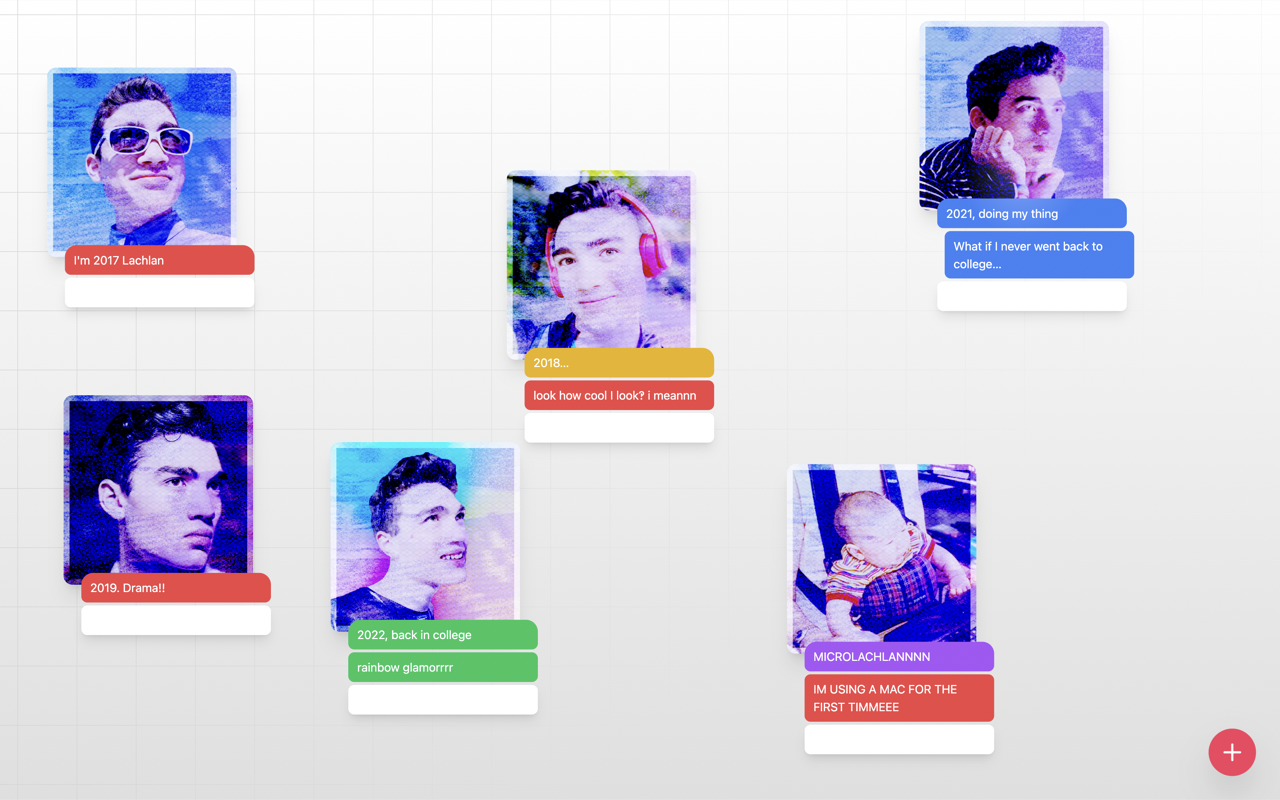

I wanted to make a little freeform canvas to upload people to: pictures of yourself from the past, or pictures of your friends, with fun image effects, bouncy animations, and a little dialogue going between them. You can model out a little conversation, think about what past versions of you would say/do, or write little affirmations about the people in the pictures.

I used React with Next.js, Tailwind CSS, and Framer Motion as the core technologies to get going; they’re my go-tos. I also used Vercel to host the site.

I started by creating a new Next.js app with npx create-next-app@latest, then added Framer Motion (npm install framer-motion). I grabbed the grid background from a demo on CodePen I saw recently. For the interaction, I used a bit of React state. GitHub Copilot helped me write the code for the image upload the whole way through. Managing Blob vs. File vs. Base64 images is a bit tricky, and Copilot saved a bunch of time.
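For reference, here is a minimal sketch of the Blob/File-to-Base64 step. It is not the project's actual upload code; `bytesToBase64` and `blobToDataUrl` are hypothetical names. The useful thing to know is that a `File` from an upload input is a `Blob` with a name attached, so one helper can cover both.

```typescript
// Hypothetical helpers (names are illustrative, not from the project).

// Encode raw bytes as a Base64 string.
function bytesToBase64(bytes: Uint8Array): string {
  let binary = "";
  for (let i = 0; i < bytes.length; i++) {
    binary += String.fromCharCode(bytes[i]);
  }
  return btoa(binary);
}

// A File is a Blob with a name and timestamp, so this accepts either one.
async function blobToDataUrl(blob: Blob): Promise<string> {
  const bytes = new Uint8Array(await blob.arrayBuffer());
  const mime = blob.type || "application/octet-stream"; // assumed fallback type
  return `data:${mime};base64,${bytesToBase64(bytes)}`;
}
```

The resulting data URL can go straight into an image `src` or into React state. In the browser, `FileReader.readAsDataURL` does the same job; this version only relies on `Blob.arrayBuffer()` and `btoa`.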

The image effects were challenging. The CSS filter property is too limited, and I wanted to do something more unexpected, like a wild passport. I actually wrote all this functionality before making the canvas, in a CodePen for simplicity. I started with a simple textured background image, which I converted to base64.

I started with multiple canvas elements in the DOM, but then discovered the OffscreenCanvas JavaScript API, which lets you make a virtual canvas. I painted the background on, then set the blend mode to “color burn,” which I had discovered in Figma looked cool against this background. I then added a border over the edges for visual effect. The effect degrades the quality of the photo and distorts the colors. (This description doesn’t capture the two hours of trial and error in this process, and sounds like I knew exactly what I wanted from the start, but you can see how it turned out.)
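The sequence above (background, blend mode, border) can be sketched roughly like this. It is an approximation under assumptions, not the project's code: `applyPassportEffect` and `BlendContext` are hypothetical names, the border color is made up, and drawing the photo as the color-burn layer over the texture is my reading of the description.

```typescript
// The subset of the 2D context API this sketch touches. Both
// CanvasRenderingContext2D and OffscreenCanvasRenderingContext2D expose these members.
interface BlendContext {
  globalCompositeOperation: string;
  strokeStyle: unknown;
  lineWidth: number;
  drawImage(image: any, dx: number, dy: number, dw: number, dh: number): void;
  strokeRect(x: number, y: number, w: number, h: number): void;
}

// Hypothetical helper: texture first, photo burned into it, border on top.
function applyPassportEffect(
  ctx: BlendContext,
  photo: unknown,
  texture: unknown,
  width: number,
  height: number,
  borderWidth: number
): void {
  // 1. Paint the textured background across the whole canvas.
  ctx.globalCompositeOperation = "source-over";
  ctx.drawImage(texture, 0, 0, width, height);

  // 2. Draw the photo with the color-burn blend mode against that backdrop.
  ctx.globalCompositeOperation = "color-burn";
  ctx.drawImage(photo, 0, 0, width, height);

  // 3. Reset blending, then stroke a border just inside the edges.
  ctx.globalCompositeOperation = "source-over";
  ctx.strokeStyle = "#f4efe6"; // assumed border color
  ctx.lineWidth = borderWidth;
  const inset = borderWidth / 2; // strokes are centered on the path
  ctx.strokeRect(inset, inset, width - borderWidth, height - borderWidth);
}
```

In the browser, `ctx` could come from `new OffscreenCanvas(width, height).getContext("2d")`, with `photo` and `texture` as `ImageBitmap`s from `createImageBitmap`. Calling `convertToBlob()` on the canvas then gets the result back out as a Blob.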

The toughest part was handling images with the P3 color gamut. Initially, they were getting blown out, with all colors outside sRGB turning rgb(0 255 0) green. Trying to keep the whole project in P3 seemed more complicated than it was worth, so I worked with Copilot to write a function to convert P3 to sRGB: making another offscreen canvas, setting it to sRGB, drawing the P3 image onto it, and getting the content out as sRGB. I passed that back into my canvas for the image manipulation, and it worked.
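The conversion described above might look roughly like this. It is a sketch of the approach, not the function Copilot and I actually ended up with; `toSrgbImageData` is a hypothetical name, and it assumes a browser that supports the `colorSpace` canvas setting.

```typescript
// Hypothetical helper: redraw a (possibly Display P3) image on an sRGB canvas
// and read the pixels back as sRGB.
function toSrgbImageData(image: ImageBitmap): ImageData {
  const canvas = new OffscreenCanvas(image.width, image.height);

  // Ask for an sRGB-backed 2D context explicitly.
  const ctx = canvas.getContext("2d", { colorSpace: "srgb" });
  if (!ctx) throw new Error("2D context unavailable");

  // Drawing converts the source pixels into the canvas's color space.
  ctx.drawImage(image, 0, 0);

  // Read back sRGB pixel data for the later image manipulation.
  return ctx.getImageData(0, 0, canvas.width, canvas.height, { colorSpace: "srgb" });
}
```

The returned `ImageData` can then be painted onto the working canvas with `putImageData`.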

I’d never built drag and drop before, but Framer Motion’s props made it super easy to make dragging feel elastic and keep elements onscreen. The chat interaction is a little half-baked; I’d love to make it feel more spatial in the future, or add some 3D to the canvas.